Most web scrapers are made up of two core parts: finding products on the website and actually scraping them. The former is often referred to as the "target discovery" step. For example, to scrape product data from an e-commerce website we first need to find the URL of each individual product, and only then can we scrape their data.

Discovering targets to scrape is often a challenging and important task. This series of blog posts tagged with #discovery-methods (also see main article) covers common target discovery approaches.

In this article we'll cover one particular discovery method: using website sitemaps to find our scrape targets.

What are sitemaps and how are they used in web-scraping?

A sitemap is an index document generated by websites for web crawlers and indexers. For example, websites that want to be crawled by Google provide an index of their products so Google's crawlers can index them quicker.

To put it shortly, sitemap files are almost always XML documents (often gzip compressed) that contain URL locations and some meta information about them, such as when each page was last modified.

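As a rough illustration, a small sitemap document and one way to read it could look like the sketch below. The sample document, the example.com URLs and the `parse_sitemap` helper are made up for this illustration; only the element names (`urlset`, `url`, `loc`, `lastmod`, `changefreq`) and the namespace come from the sitemap protocol.

```python
import gzip
import xml.etree.ElementTree as ET

# Namespace defined by the sitemap protocol (sitemaps.org).
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Illustrative sample, not taken from any real website.
SAMPLE_SITEMAP = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.com/product/1</loc>
    <lastmod>2022-01-01</lastmod>
    <changefreq>daily</changefreq>
  </url>
  <url>
    <loc>https://example.com/product/2</loc>
  </url>
</urlset>"""


def parse_sitemap(body: bytes) -> list[dict]:
    """Return one dict per <url> entry; accepts plain or gzip-compressed XML."""
    if body[:2] == b"\x1f\x8b":  # gzip magic bytes
        body = gzip.decompress(body)
    root = ET.fromstring(body)
    entries = []
    for url in root.findall("sm:url", NS):
        entries.append({
            "loc": url.findtext("sm:loc", default="", namespaces=NS).strip(),
            # Optional elements come back as None when they are missing.
            "lastmod": url.findtext("sm:lastmod", namespaces=NS),
            "changefreq": url.findtext("sm:changefreq", namespaces=NS),
        })
    return entries
```

Only `loc` is required for each entry, so a scraper should expect the metadata fields to be missing on some websites.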

For more on sitemap structure rules, see the official specification page.

The documents themselves are usually categorized by name. For example:

- Blog posts of the website would be contained in sitemap_blogs.xml.

- Sold products might be split across multiple files: sitemap_products_1.xml, sitemap_products_2.xml, etc.

Before using sitemaps as a web scraping discovery strategy, it's good practice to reflect on the common pros and cons of this technique and see whether it fits your web-scraping project:

Pros:

- Efficiency: A single sitemap can contain thousands of items (the sitemap protocol allows up to 50,000 URLs per file), and often an entire catalog can be discovered in just a few requests!

- Simplicity: There's no need for advanced reverse engineering knowledge to use sitemap based discovery.

Cons:

- Data Staleness: Sitemap indexes need to be generated by the website explicitly, and sometimes newer products might not appear in the index for a significant amount of time.

- Data Validity: As per the previous point, because of sitemap staleness some product links might be expired or invalid. This might cause unnecessary load on your scraper.

- Data Completeness: Since sitemaps are generated for crawlers and indexers, they might not have all the data that is available on the website. For this reason it is important to confirm sitemap coverage during the development of a scraper.

- Availability: Sitemaps are (or used to be) an important part of the web, particularly in SEO. However, they are not always present on modern websites that try to avoid web-scraping, use hard-to-index website structures, or are just too big for such indexes.

- Risk: Some websites use sitemaps as honeypots for web-scrapers, directing them to invalid data or using the sitemap to identify and ban scrapers.

As you can see, the sitemap discovery approach appears to be simple and efficient, though not always viable. Generally, when developing a discovery strategy, sitemaps are the first place I look for product data. I then confirm quality by trying alternative discovery approaches and seeing if the coverage matches.

Finding Sitemaps

To take advantage of sitemaps, we first need to figure out how to find them. A common way to find sitemaps is to check the robots.txt or sitemap.xml file.

For example, let's take the popular clothing shop hm.com.

First, we go to the /robots.txt page: https://hm.com/robots.txt

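Pulling the sitemap links out of a robots.txt file can be sketched with Python's built-in `urllib.robotparser`. The robots.txt body below is illustrative: its rules are made up, and only the `Sitemap:` URL is the one mentioned in this article. The `find_sitemaps` helper name is also an assumption of this sketch.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt body: the rules are made up, only the Sitemap URL
# is the one mentioned in this article.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /checkout/
Sitemap: http://www2.hm.com/sitemapindex.xml
"""


def find_sitemaps(robots_txt: str) -> list[str]:
    """Return every URL declared with a `Sitemap:` directive."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap directive is present.
    return parser.site_maps() or []


print(find_sitemaps(SAMPLE_ROBOTS))
```

`site_maps()` requires Python 3.8 or newer. On older versions the same result can be had by scanning the lines for a case-insensitive `sitemap:` prefix.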

We see some robot scraping rules and a link to the sitemap index! If we proceed and take a look at the sitemap index at http://www2.hm.com/sitemapindex.xml, we can see a list of further sitemap files.


The index is split into localized parts. Let's continue to the en_us index (or whichever you prefer; they should all work the same way): https://www2.hm.com/en_us.sitemap.xml


This is an index of sitemap indexes. We see there are indexes for articles, pages, categories, etc., but most importantly there is a product index: ....products.N.xml.
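Picking the product files out of such an index can be sketched as follows. The sample index and its file names are hypothetical stand-ins (they are not the real hm.com file names), and the `product_sitemaps` helper and its `keyword` parameter are assumptions of this sketch.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Illustrative index; the file names are hypothetical.
SAMPLE_INDEX = b"""<?xml version="1.0" encoding="UTF-8"?>
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap><loc>https://example.com/sitemap.pages.1.xml</loc></sitemap>
  <sitemap><loc>https://example.com/sitemap.products.1.xml</loc></sitemap>
  <sitemap><loc>https://example.com/sitemap.products.2.xml</loc></sitemap>
</sitemapindex>"""


def product_sitemaps(index_body: bytes, keyword: str = "products") -> list[str]:
    """Return the child sitemap URLs of an index whose URL contains `keyword`."""
    root = ET.fromstring(index_body)
    # An index lists <sitemap> entries, while a regular sitemap lists <url> entries.
    locs = [el.text.strip() for el in root.findall("sm:sitemap/sm:loc", NS) if el.text]
    return [loc for loc in locs if keyword in loc]


print(product_sitemaps(SAMPLE_INDEX))
```

Filtering on the file name is a heuristic: it works when the website names its sitemaps by category, which is common but not guaranteed.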

There's some important metadata as well, such as when each index was last updated: <lastmod>. In this case the index is 1 day old, so this discovery approach will not pick up any products that have been added in the last few hours.
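To judge staleness programmatically, the `<lastmod>` value can be turned into an age. This is a minimal sketch that assumes the value is a full date or a full timestamp (the protocol also allows shorter forms such as a bare year, which this sketch does not handle). The `sitemap_age` helper is a name made up for this example.

```python
from datetime import datetime, timedelta, timezone


def sitemap_age(lastmod: str, now: datetime) -> timedelta:
    """Return how long ago `lastmod` was, relative to a timezone-aware `now`."""
    # fromisoformat() only accepts a trailing "Z" on Python 3.11+, so normalize it.
    stamp = datetime.fromisoformat(lastmod.strip().replace("Z", "+00:00"))
    if stamp.tzinfo is None:
        # Date-only values carry no timezone; treating them as UTC is an assumption.
        stamp = stamp.replace(tzinfo=timezone.utc)
    return now - stamp


# Example with a fixed "now" so the result is reproducible.
now = datetime(2022, 1, 2, 12, 0, tzinfo=timezone.utc)
print(sitemap_age("2022-01-01T12:00:00Z", now))
```

In a real scraper `now` would be `datetime.now(timezone.utc)`, and an age above some threshold could trigger a fallback to another discovery method.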

Every website engine generates sitemaps on its own schedule: some regenerate once a day or once a week, which is often indicated by the <changefreq>always|hourly|daily|...</changefreq> element. Modern, smaller websites, though, usually regenerate the sitemap on demand whenever the product index is updated, which is great for web-scrapers!

Example Use Case: HM.com

Let's write a simple sitemap scraper that will find all product URLs on the previously mentioned website, https://hm.com. For this we'll be using Python with the requests and parsel libraries.

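A minimal sketch of such a scraper is shown here. To stay dependency-free it swaps requests and parsel for the standard library's `urllib` and `xml.etree.ElementTree`, which is a substitution made for this sketch. The function names (`download`, `parse`, `discover`), the `keyword` filter and the User-Agent string are all assumptions, and whether the website serves these files to a plain client is not guaranteed.

```python
import gzip
import urllib.request
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def download(url: str) -> bytes:
    """Download a URL and return the raw response body."""
    # The User-Agent value is a placeholder chosen for this sketch.
    request = urllib.request.Request(url, headers={"User-Agent": "sitemap-discovery-sketch"})
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read()


def parse(body: bytes):
    """Split a sitemap document into (child sitemap URLs, page URLs)."""
    if body[:2] == b"\x1f\x8b":  # gzip-compressed sitemap
        body = gzip.decompress(body)
    root = ET.fromstring(body)
    children = [el.text.strip() for el in root.findall("sm:sitemap/sm:loc", NS) if el.text]
    pages = [el.text.strip() for el in root.findall("sm:url/sm:loc", NS) if el.text]
    return children, pages


def discover(start_url: str, keyword: str = "products", fetch=download) -> set:
    """Walk a sitemap tree and collect unique page URLs from matching sitemaps."""
    found, seen, queue = set(), set(), [start_url]
    while queue:
        url = queue.pop()
        if url in seen:
            continue
        seen.add(url)
        children, pages = parse(fetch(url))
        # Only follow child sitemaps whose URL mentions the keyword,
        # e.g. the ....products.N.xml files.
        queue.extend(child for child in children if keyword in child)
        # Using a set removes duplicate URLs across sitemap files.
        found.update(pages)
    return found
```

Calling `discover("https://www2.hm.com/en_us.sitemap.xml")` would walk the en_us index and return the set of product URLs. The `fetch` parameter exists so the download step can be replaced, for example with `lambda url: requests.get(url).content` or with a stub during testing.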

If we run this small scraper script, we'll see that this sitemap discovery approach yields 13639 unique results (at the time of writing)! Even if we scrape synchronously, the sitemap approach is a really efficient way to discover a large number of products.

Confirming Results

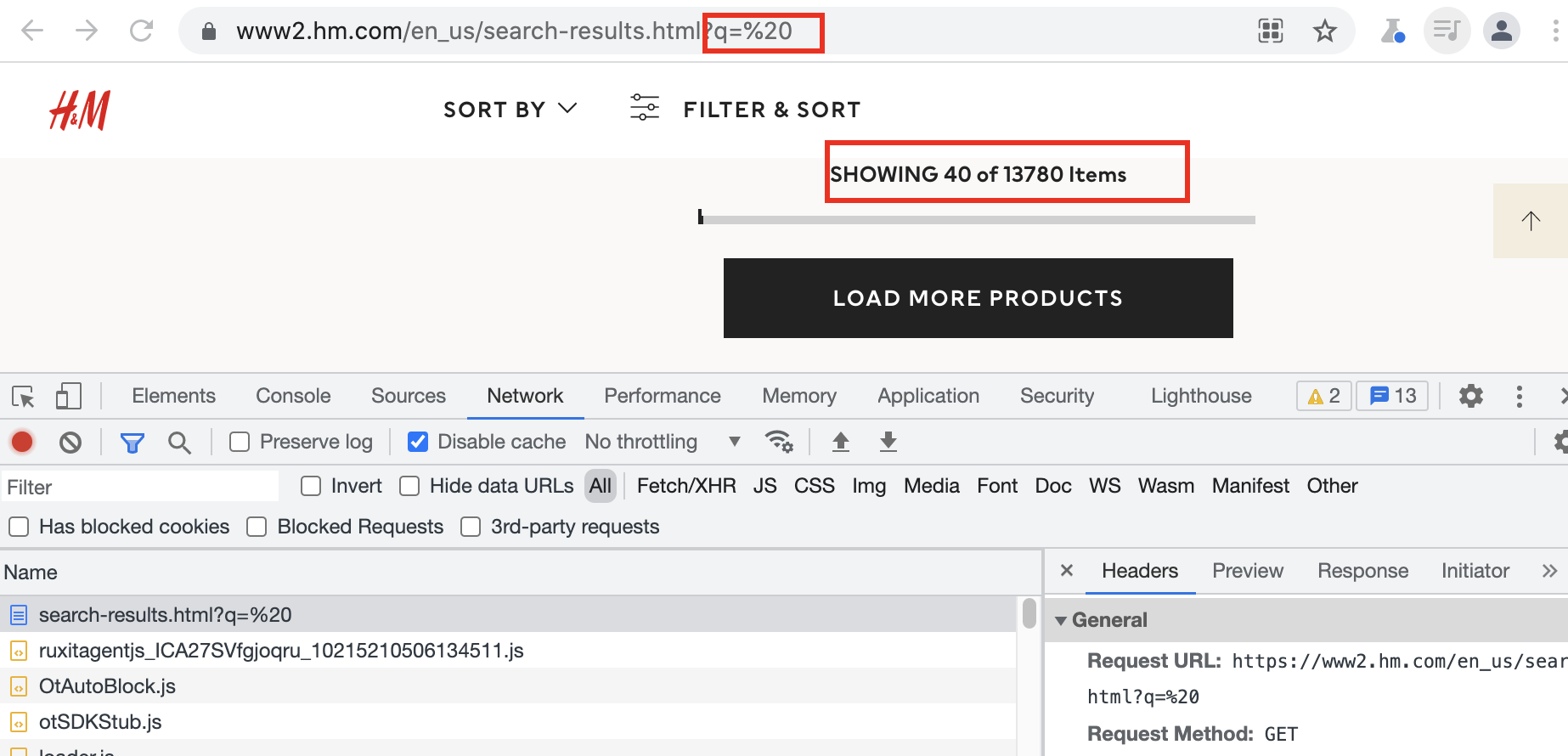

Finally, we should confirm whether this discovery approach has good coverage by comparing it with other discovery approaches. For that we either need to find the total product count somewhere (some websites mention "total N results available" in their content) or use another discovery strategy to evaluate our coverage. For this particular website we can take a look at the search bar discovery approach covered in another Scrapecrow article.


Using the empty search approach described in the search discovery article, we can see that our sitemap discovery yields almost the same number of results:

- Sitemaps: 13639

- Searchbar: 13780

The 141 results we're missing (about 1% of the search total) are probably an indication that the sitemap index is running slightly behind the product database. This is a good illustration of why it matters to compare different discovery techniques. For important scrapers, it's a good idea to diversify.
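The comparison itself is simple set arithmetic. Here is a small sketch, where `coverage_report` is a hypothetical helper that takes the URL sets produced by two discovery methods. The final lines use only the two totals quoted above.

```python
def coverage_report(sitemap_urls: set, search_urls: set) -> dict:
    """Compare two discovery results and summarize how well they overlap."""
    overlap = len(sitemap_urls & search_urls)
    return {
        "only_in_sitemap": len(sitemap_urls - search_urls),
        "only_in_search": len(search_urls - sitemap_urls),
        # Share of search results that the sitemap also found.
        "coverage": overlap / len(search_urls) if search_urls else 0.0,
    }


# With only the totals from above we can at least compare counts:
sitemap_total, search_total = 13639, 13780
print(f"missing: {search_total - sitemap_total}")          # missing: 141
print(f"count ratio: {sitemap_total / search_total:.2%}")  # count ratio: 98.98%
```

Matching counts alone do not prove that both methods found the same products, so comparing the actual URL sets is the more reliable check when both are available.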

Summary and Further Reading

To summarize, using sitemaps in web scraping is an efficient, effective and quick product discovery technique, with the only real downsides being data staleness, coverage and availability.

For more web-scraping discovery techniques, see #discovery-methods, and see #discovery for more discovery-related subjects.

If you have any questions, come join us on #web-scraping on Matrix, check out #web-scraping on Stack Overflow or leave a comment below!

As always, you can hire me for web-scraping consultation over at the hire page. Happy scraping!